Recently, Associate Professor Peng Yijie's research team from the Department of Management Science and Information Systems at Peking University's Guanghua School of Management, who also serves as the Executive Director of the Center for Multi-Agent and Social Intelligence at the Institute for Artificial Intelligence, published a paper titled "An Efficient Node Selection Policy for Monte Carlo Tree Search with Neural Networks" in the high-impact journal INFORMS Journal of Computing.

In these years, fields such as intelligent operations management, manufacturing, and robotic control have been facing increasingly large-scale decision-making challenges. Central to these challenges is the need to accurately identify optimal action plans within vast action spaces, a task that often renders traditional rule-based search methods computationally infeasible. Monte Carlo Tree Search (MCTS), an efficient algorithm that combines the randomness of Monte Carlo simulations with the precision of tree search, effectively addresses complex and large-scale decision problems. It has demonstrated unique advantages and potential in areas like autonomous driving, computer games, and combinatorial optimization problems. The success of AlphaGo in the field of artificial intelligence has ushered in a new trend of integrating value networks and policy networks into MCTS to further enhance algorithm performance.

Figure1.Monte Carlo Tree Search (MCTS)

MCTS essentially tackles a black-box system simulation optimization problem. In classical MCTS, the node selection strategy employs the Upper Confidence Bounds applied to Trees (UCT) algorithm, which effectively balances exploration and exploitation during node selection. However, the framework used to derive the UCT algorithm differs from that of the MCTS problem, and it does not fully utilize the information obtained during simulation sampling. This study models the node selection problem in MCTS as a multi-stage Ranking and Selection (R&S) problem, a framework more aligned with MCTS. The research extends asymptotically optimal simulation budget allocation strategies used for solving R&S problems into a node selection strategy for tree search. By balancing action values and variances, this strategy efficiently allocates limited simulation resources to maximize the probability of correctly selecting the optimal action. Furthermore, the study integrates value neural networks and policy neural networks into the proposed node selection strategy, providing the algorithm with prior information and optimal action identification insights, thereby enhancing the strategy's performance.

Figure2.Experimental Results on Tic-Tac-Toe

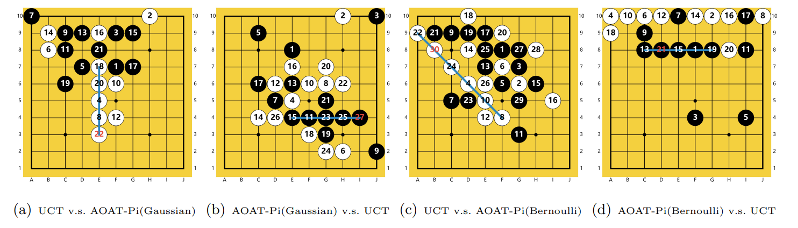

Figure3.Experimental Results on Gomoku

Figure4.Experimental Results in the Reinforcement Learning Inverted Pendulum Environment

The proposed algorithm was applied to computer games such as Tic-Tac-Toe and Gomoku. Numerical results indicate that, without incorporating any neural network information, the algorithm significantly improves the probability of correctly identifying optimal actions compared to the classical UCT strategy. When combined with neural network information, the algorithm achieves a higher win rate in gameplay compared to the UCT strategy used in AlphaGo Zero. Additionally, in tests within the OpenAI CartPole environment, the algorithm attains higher game scores under the same number of iterations compared to the UCT strategy employed in MuZero. Further numerical tests validate the effectiveness of value networks and policy networks in enhancing algorithm performance. This research highlights the potential of extending dynamic simulation budget allocation strategies into node selection strategies within MCTS, suggesting that applying this novel MCTS approach to large-scale decision problems warrants further in-depth investigation.

Liu Xiaotian, a doctoral student in the School of Industrial and Systems Engineering at Georgia Institute of Technology, is the first author of the paper, and Yijie Peng is the corresponding author. Co-authors include Gongbo Zhang and Ruihan Zhou, an assistant researcher and a doctoral student from Guanghua School of Management, Peking University.