Human interaction with the environment is mediated by the hands, which enable a wide range of operations that are both routine and essential. For robots, the medium of interaction is the end effector, which can take different forms, such as a gripper, a suction cup, or, as with humans, a dexterous hand. Teaching robots to use dexterous hands is of great significance: to operate tools designed for humans and to integrate seamlessly into human environments, robots need human-like hands. To this day, however, manipulating objects with dexterous hands, even basic grasping, remains a challenging and unsolved problem.

Figure 1: Robot hand grasping a toy cat.

Recently, the research group of Assistant Professor Yixin Zhu at the Institute for Artificial Intelligence, Peking University, together with collaborators, made significant progress toward dexterous manipulation that generalizes across object categories. Their paper, "SparseDFF: Sparse-View Feature Distillation for One-Shot Dexterous Manipulation," was published at CVPR 2024, a top-tier computer vision conference.

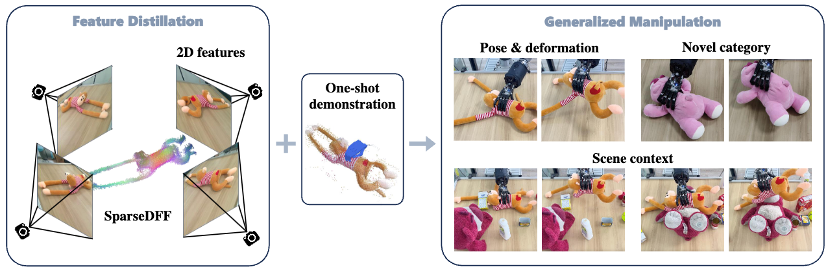

The researchers developed SparseDFF, a feature-distillation approach to dexterous manipulation. SparseDFF distills 2D visual features from large pre-trained vision models into 3D feature fields and refines them, enabling robots to understand semantic correspondences across different object instances. This allows dexterous manipulation skills to be transferred efficiently, from a single demonstration, to a variety of scenarios. In real-world experiments, SparseDFF achieves state-of-the-art (SOTA) performance among dexterous grasping methods under the same experimental settings.

In artificial intelligence, there is growing interest in enabling machines to generalize from limited examples, as humans do. Traditional methods such as imitation learning and reinforcement learning often suffer from high data requirements, poor generalization, and low success rates. Humans, by contrast, learn by building a deep understanding of geometric structure and semantic information, and this work draws on that insight to give robots a comparable understanding of the objects they manipulate.

Figure 2: Similar grasping postures can be aligned in high-dimensional feature space.

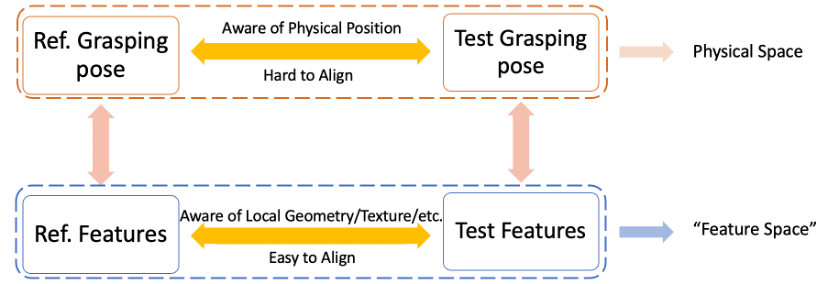

In computer vision, feature representations are commonly used to describe image content, but robot grasp poses themselves are difficult to align directly across different objects. To address this, the researchers construct high-level feature fields that encode semantic, geometric, and visual information. By mapping both the demonstrated grasp pose and an initialized grasp pose into this feature space and aligning them through optimization, the robot can learn and reproduce complex manipulation tasks.
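One way to write the alignment step down, in the style of distilled-feature-field methods, is as an energy over the hand pose. The notation here is ours, not the paper's: $\mathbf{q}$ denotes the hand pose (wrist pose and joint angles), $x_i(\mathbf{q})$ are $N$ query points attached to the hand in that pose, $\mathbf{q}_{\text{demo}}$ is the demonstrated pose, and $F_{\text{demo}}$, $F_{\text{new}}$ are the feature fields of the demonstration scene and the new scene. SparseDFF's actual objective may include further terms.

$$
E(\mathbf{q}) = \sum_{i=1}^{N} \left\| F_{\text{new}}\big(x_i(\mathbf{q})\big) - F_{\text{demo}}\big(x_i(\mathbf{q}_{\text{demo}})\big) \right\|_2^2,
\qquad
\mathbf{q}^{*} = \arg\min_{\mathbf{q}} E(\mathbf{q})
$$

Minimizing $E$ moves the hand's query points to locations in the new scene whose features match the features those points had in the demonstration, which is what "aligning in feature space" amounts to.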

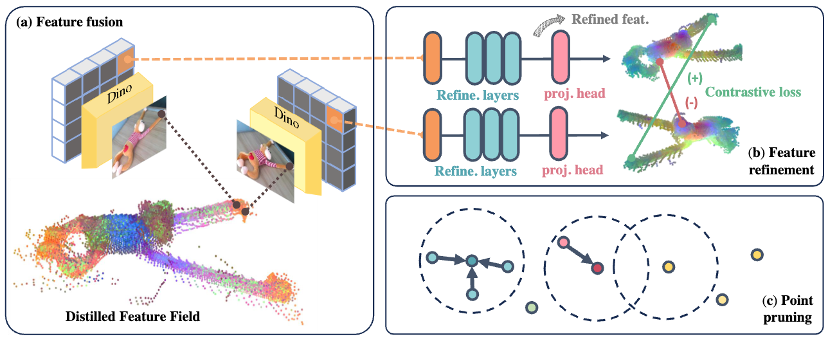

Figure 3: Schematic diagram of the SparseDFF method.

SparseDFF requires only a single demonstration to transfer a manipulation skill to new object poses and new objects. By distilling features from pre-trained vision models such as DINO, it meets three demands at once: minimal training data, strong generalization, and high success rates. Empirically, SparseDFF outperforms existing methods under the same experimental settings.

Figure 4: Constructing distilled feature fields.

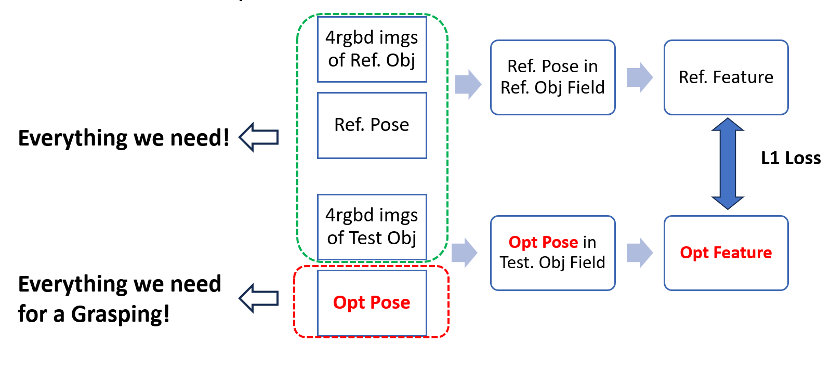

As shown in Figure 4(a), the researchers adopt a 3D feature distillation approach to construct a 3D feature field. Unlike previous methods that reconstruct a continuous implicit feature field with a NeRF representation, SparseDFF applies DINO to the RGB images, refines the resulting features on discrete 3D points, and propagates them into the surrounding space. Using the correspondence between pixels and 3D points, the image features are projected directly onto the point cloud, so that every point receives its own feature vector.
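To make the pixel-to-point projection concrete, here is a minimal NumPy sketch. It assumes calibrated pinhole cameras with known intrinsics `K` and world-to-camera extrinsics `R`, `t`; per-view feature maps already resized to image resolution (for example, upsampled DINO patch features); nearest-pixel sampling; plain averaging across views; and no occlusion handling. The "propagation into the surrounding space" is modeled as inverse-distance-weighted k-nearest-neighbor interpolation, which is our assumption rather than the paper's stated scheme. All function names are hypothetical.

```python
import numpy as np


def project_points(points, K, R, t):
    """Project world-frame points (N, 3) to pixel coordinates (N, 2).

    R (3x3) and t (3,) map world coordinates into the camera frame, and K is a
    3x3 pinhole intrinsic matrix. Also returns each point's depth (N,).
    """
    cam = points @ R.T + t                  # world frame -> camera frame
    depth = cam[:, 2]
    uvw = cam @ K.T                         # camera frame -> homogeneous pixels
    # Avoid division by zero for points on the camera plane; such points are
    # rejected later by the depth test anyway.
    z = np.where(np.abs(uvw[:, 2]) > 1e-12, uvw[:, 2], 1e-12)
    return uvw[:, :2] / z[:, None], depth


def gather_point_features(points, views):
    """Average per-pixel features over every view in which a point is visible.

    `views` is a list of dicts with keys "feat" (H, W, C), "K", "R", "t".
    A point counts as visible if it is in front of the camera and projects
    inside the image (occlusion is ignored in this sketch).
    Returns (features (N, C), has_feature mask (N,)).
    """
    n = points.shape[0]
    acc = np.zeros((n, views[0]["feat"].shape[2]))
    count = np.zeros(n)
    for view in views:
        feat = view["feat"]
        h, w, _ = feat.shape
        uv, depth = project_points(points, view["K"], view["R"], view["t"])
        u, v = np.rint(uv[:, 0]), np.rint(uv[:, 1])   # nearest pixel
        visible = (depth > 0) & (u >= 0) & (u < w) & (v >= 0) & (v < h)
        ui = u[visible].astype(int)
        vi = v[visible].astype(int)
        acc[visible] += feat[vi, ui]
        count[visible] += 1
    has_feat = count > 0
    acc[has_feat] /= count[has_feat][:, None]
    return acc, has_feat


def query_feature_field(queries, points, point_feats, k=8, eps=1e-8):
    """Interpolate point features at arbitrary 3D queries (Q, 3) -> (Q, C)."""
    d = np.linalg.norm(queries[:, None, :] - points[None, :, :], axis=-1)  # (Q, N)
    k = min(k, points.shape[0])
    idx = np.argsort(d, axis=1)[:, :k]                                      # (Q, k)
    d_k = np.take_along_axis(d, idx, axis=1)
    w = 1.0 / (d_k + eps)                        # closer points weigh more
    w /= w.sum(axis=1, keepdims=True)
    return np.einsum("qk,qkc->qc", w, point_feats[idx])
```

Because the features live on discrete points, querying the field at a new location, such as a fingertip, reduces to a nearest-neighbor lookup rather than a NeRF rendering pass.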

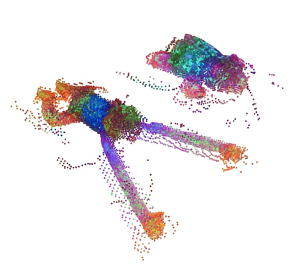

Figure 5: Feature field before optimization.

Figure 6: Feature field after optimization.

After building the initial, unrefined feature field from the point cloud (Figure 5), the team designed a feature refinement network. The network is trained with self-supervised learning on a single scene; once trained, it is applied directly to new experimental scenes to improve the continuity of the features and thereby their quality (Figure 6). In addition, a pruning mechanism, illustrated in Figure 4(c), further improves the consistency of the point cloud features.
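The article does not spell out the self-supervised objective or the pruning rule, so the NumPy sketch below fills both in with labeled assumptions. It assumes the refinement network is trained with a contrastive (InfoNCE-style) loss that pulls together the features of the same 3D point seen from two views and pushes apart features of different points; only the loss is computed here, not the network or its training loop. It also assumes pruning drops points whose feature disagrees with the mean feature of their spatial neighbors. Function names, `temperature`, `k`, and `min_cos` are hypothetical.

```python
import numpy as np


def info_nce_loss(feats_a, feats_b, temperature=0.1):
    """Contrastive loss between two views' features of the same points.

    Row i of feats_a and row i of feats_b are assumed to belong to the same 3D
    point seen from two camera views; all other rows act as negatives.
    """
    a = feats_a / (np.linalg.norm(feats_a, axis=1, keepdims=True) + 1e-8)
    b = feats_b / (np.linalg.norm(feats_b, axis=1, keepdims=True) + 1e-8)
    logits = a @ b.T / temperature                   # (N, N) scaled cosine similarities
    logits -= logits.max(axis=1, keepdims=True)      # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))        # matched pairs sit on the diagonal


def prune_inconsistent_points(points, feats, k=2, min_cos=0.5):
    """Return a keep-mask: True where a point's feature agrees with its k neighbors."""
    n = points.shape[0]
    d = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    d[np.arange(n), np.arange(n)] = np.inf           # exclude each point itself
    nbrs = np.argsort(d, axis=1)[:, :k]              # (N, k) nearest-neighbor indices
    nbr_mean = feats[nbrs].mean(axis=1)              # (N, C) mean neighbor feature
    cos = np.sum(feats * nbr_mean, axis=1) / (
        np.linalg.norm(feats, axis=1) * np.linalg.norm(nbr_mean, axis=1) + 1e-8
    )
    return cos >= min_cos
```

Under these assumptions, a low contrastive loss means the same surface point gets the same feature regardless of viewpoint, and pruning removes isolated points whose features break local continuity.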

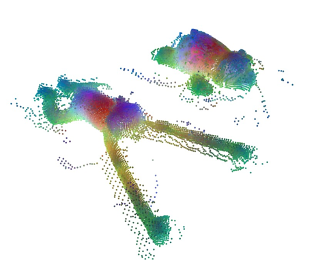

The team then used the pruned point cloud to extract features for the dexterous hand. Based on the difference between these features and the corresponding features from the demonstration, they optimized the end-effector pose to minimize the discrepancy between the test object and the demonstration object, which yields the final grasp pose.
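As a rough illustration of end-effector optimization, the sketch below minimizes the feature mismatch between hand query points in the new scene and the features those points had in the demonstration. It simplifies heavily: only the wrist translation is optimized (a real dexterous hand also has a rotation and joint angles), the gradient comes from central finite differences, and `field_new` is any callable mapping (M, 3) points to (M, C) features, for example an interpolated point-cloud feature field. The function names and the toy linear field in the usage example are hypothetical, not SparseDFF's implementation.

```python
import numpy as np


def feature_energy(t, hand_points, field_new, demo_feats):
    """Squared feature mismatch after translating the hand query points by t."""
    diff = field_new(hand_points + t) - demo_feats
    return float(np.sum(diff ** 2))


def optimize_translation(hand_points, field_new, demo_feats,
                         lr=0.05, steps=200, h=1e-4):
    """Gradient descent on the translation t using central finite differences."""
    t = np.zeros(3)
    for _ in range(steps):
        grad = np.zeros(3)
        for j in range(3):
            e = np.zeros(3)
            e[j] = h
            grad[j] = (feature_energy(t + e, hand_points, field_new, demo_feats)
                       - feature_energy(t - e, hand_points, field_new, demo_feats)) / (2 * h)
        t -= lr * grad
    return t


if __name__ == "__main__":
    # Toy setup: a point's "feature" is its own position, and the new object is
    # the demo object shifted by `offset`, so the best translation is `offset`.
    offset = np.array([0.3, -0.2, 0.5])
    hand = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0],
                     [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
    demo_feats = hand.copy()                    # F_demo(x) = x at the demo pose
    field_new = lambda x: x - offset            # F_new(x) = F_demo(x - offset)
    print(optimize_translation(hand, field_new, demo_feats))  # approximately recovers offset
```

Finite differences keep the sketch dependency-free; in practice one would more likely use an automatic-differentiation framework and optimize the full hand pose, possibly with additional terms such as collision penalties.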

Figure 7: End-effector optimization.

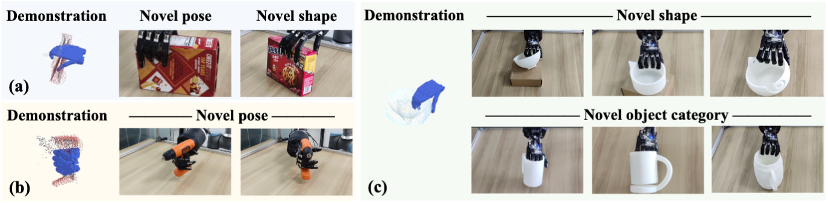

The team quantitatively evaluated the proposed algorithm on a range of objects. For rigid objects, they tested a drill, two types of boxes, three types of bowls, and three types of cups, and compared success rates against UniDexGrasp++ and DFF. The proposed algorithm substantially improved success rates both on new poses of the same object and when generalizing to different objects, with the largest gains in the latter setting.

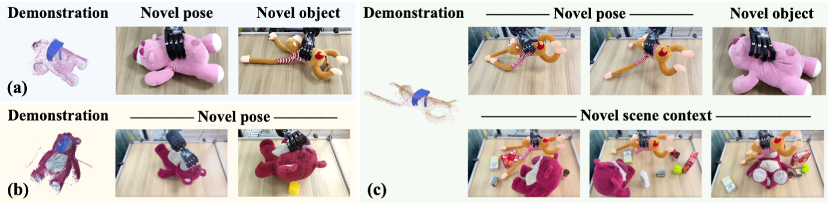

For flexible object tests, they experimented with a plush monkey and a plush bear, generalizing the grasping features of the plush monkey to different poses of the same toy, a monkey surrounded by clutter, and a small plush bear. This validated the proposed method’s advantages in pose generalization, robustness to cluttered environments, and consistency across different objects. Additionally, they compared the proposed method with DFF on the task of grasping the nose of a large plush bear, further generalizing the features of the plush bear to different poses of both plush bears and monkeys. The results confirmed the model’s stability in generalizing to different objects.

Figure 8: Rigid object experiments.

Figure 9: Flexible object experiments.

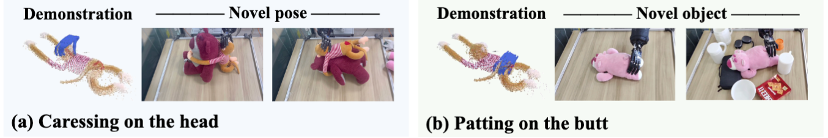

Figure 10: Semantically rich manipulation tasks.

Beyond grasping, the proposed method extends to various manipulation tasks, demonstrating robust generalization to new object poses, deformations, geometries, and categories in real-world scenarios.

This research addresses the challenge of enabling robots to learn dexterous manipulation skills from limited demonstrations, providing a promising approach for robots to adapt to diverse real-world scenarios.

The first author of the paper is Qianxu Wang, an undergraduate in the 2021 cohort of Peking University's School of Electronics Engineering and Computer Science. The corresponding authors are Assistant Professor Yixin Zhu of Peking University, together with Professor Leonidas Guibas and PhD candidate Congyue Deng of Stanford University.